Load Base Model

Introduction #

The playground is your space to experiment with various base models by combining them with your datasets and specific instructions to forge a snapshot. A snapshot integrates a large language model (LLM), a set of prompts, and, optionally, a dataset, all of which you can save and reuse for tasks like fine-tuning.

Part 2: Load Base Model #

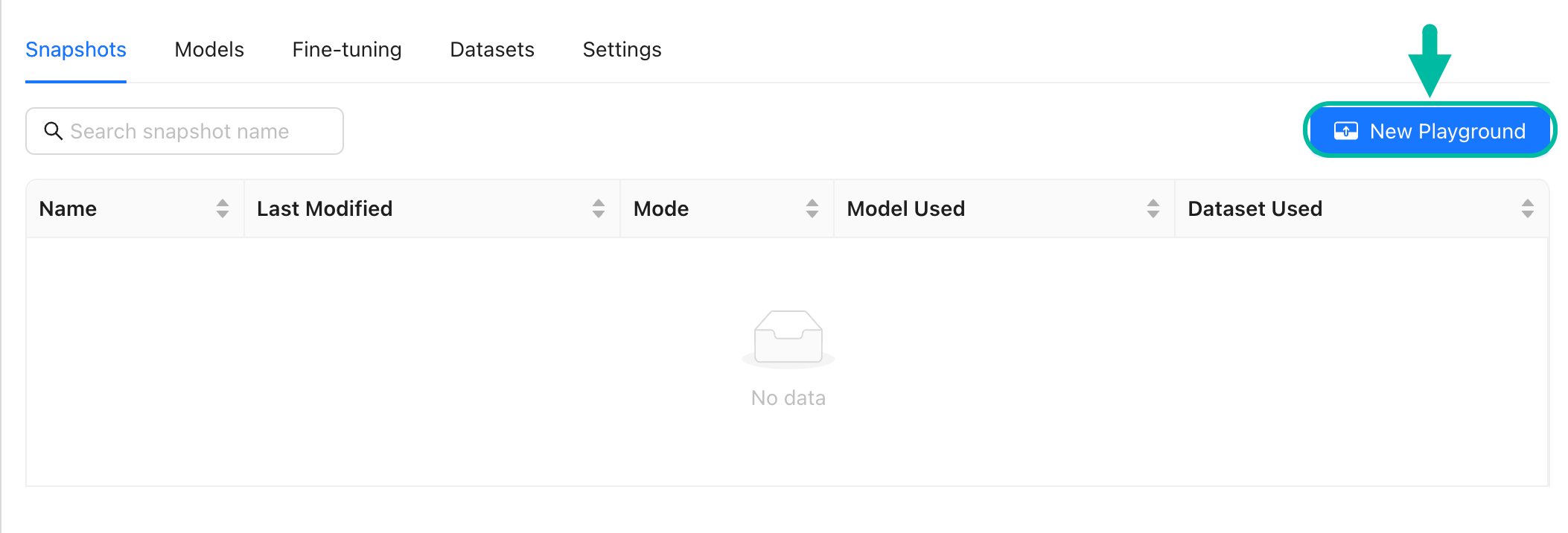

Open the Playground #

- Navigate to the Snapshots tab in your project and select New Playground.

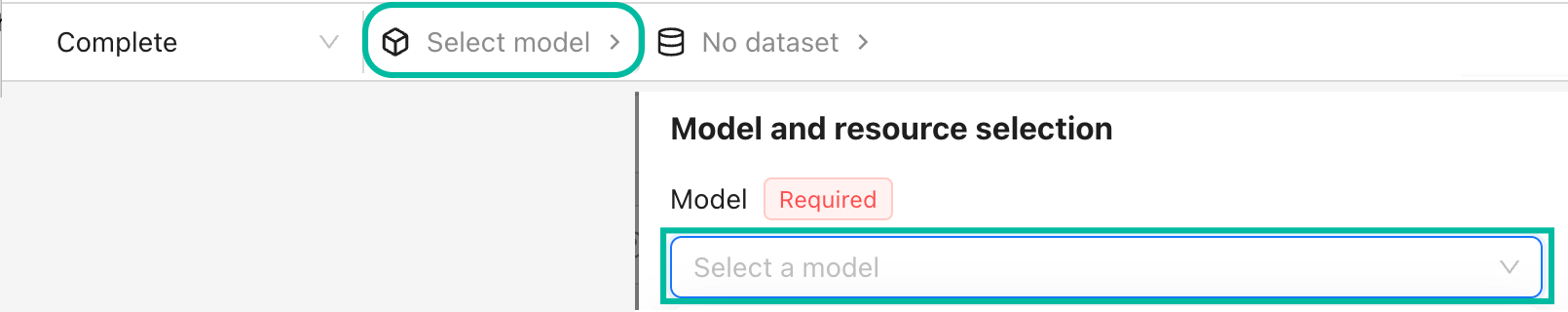

Configure Model Properties #

- Open the Select model drawer and then pick a base model from the list, such as

falcon-7b-instruct main.falcon-7b-instruct mainis already fine-tuned for taking instructions.

- Choose a Resource pool. Opt for an

a100resource pool of NVIDIA A100s. NVIDIA A100s are generally recommended for inference and fine-tuning However, if your resources are limited, you can use NVIDIA V100s or NVIDIA T4s. - In Model generation properties, set Max new tokens to

2000. - Select Load.

Configure Model Generation Properties #

We’re actually going to leave the default settings for this section in place for now. (We’ll come back to these later in the tutorial.)

Here’s a brief overview of what each property does:

| Property | Description |

|---|---|

| Temperature | The higher the temperature, the more creative the outputs. |

| Max New Tokens | The maximum number of tokens to generate. |

| Top K | Selects the next token by selecting from the k most probable tokens. Higher k increases randomness. |

| Top P | Selects the next token by selecting from the smallest possible set of tokens whose cumulative probability exceeds top p. Values range between 0.0 (deterministic) to 1.0 (more random). We will always keep at least 1 token. |

Recap #

- You have successfully set up the playground with a base model specifically fine-tuned for instruction-based tasks.

- You have also chosen an appropriate resource pool to ensure optimal model performance.