Initiate Canary Rollout

Canary rollouts let you safely test a new model version alongside the version that is currently deployed by directing a percentage of traffic to the new version. This lets you compare the performance of the two versions before fully deploying the new one.

Before You Start

- You must have already created a deployment.

- You must have either a new packaged model or a new version of the existing deployed packaged model that you want to test.

Rollout Behavior

How a canary rollout behaves depends on the traffic percentage (--canary-traffic-percent) set on the deployment for the new model version. The traffic percentage you provide maps to the following behaviors:

- 100%: Triggers a full, immediate rollout to the new version instead of a canary rollout. The prior model version stops serving traffic as soon as the new version is serving requests.

- 1-99%: Triggers a canary rollout in which both versions serve requests; once the new instance is ready, it receives the defined canary traffic percentage.

- 0%: Cancels the canary rollout and reverts all traffic to the previously deployed model version.
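The percentage-to-behavior mapping above can be summarized as a small shell function. This is a minimal sketch for illustration only. `canary_behavior` is a hypothetical helper and is not part of the `aioli` CLI. Rejecting values that are not whole numbers is an assumption about valid input, not documented CLI validation.

```bash
# Hypothetical helper (not part of the aioli CLI): describe what a canary
# traffic percentage does, following the "Rollout Behavior" list.
canary_behavior() {
  pct=$1
  # Assumption: the setting is a whole number between 0 and 100.
  case $pct in
    ''|*[!0-9]*)
      echo "error: '$pct' is not a whole number" >&2
      return 1
      ;;
  esac
  if [ "$pct" -gt 100 ]; then
    echo "error: $pct is greater than 100" >&2
    return 1
  elif [ "$pct" -eq 100 ]; then
    echo "full rollout"   # new version takes over; prior version stops serving
  elif [ "$pct" -eq 0 ]; then
    echo "cancel"         # all traffic reverts to the prior version
  else
    echo "canary"         # both versions serve; pct% goes to the new version
  fi
}

# Example: print the behavior for a few percentages.
for p in 0 5 100; do
  printf '%s%% -> %s\n' "$p" "$(canary_behavior "$p")"
done
```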

Once you are satisfied with the new model version’s performance, complete the rollout by updating the canary traffic percentage to 100%.

When the traffic percentage is set to 0%, the inference service for the canary version remains present but handles no requests. It continues to consume any GPU resources assigned to it until you perform another rollout, which can be either a 100% rollout of the prior version or a new rollout of another model version.

How to Initiate a Canary Rollout

Via the UI

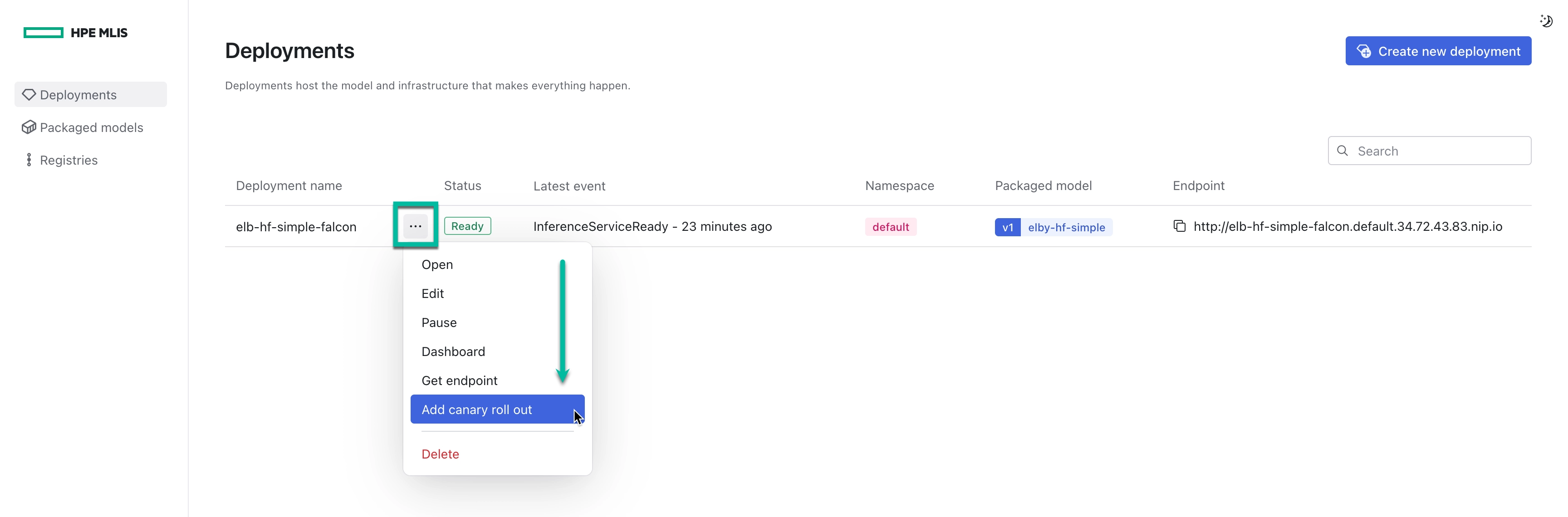

- Sign in to HPE Machine Learning Inferencing Software.

- Navigate to Deployments.

- Select the ellipsis next to the deployment you want to initiate a canary rollout for.

- Select Add canary rollout.

- Select which Packaged Model you want to roll out.

- Select how much traffic you want to direct to the new model as a percentage.

- Select Done.

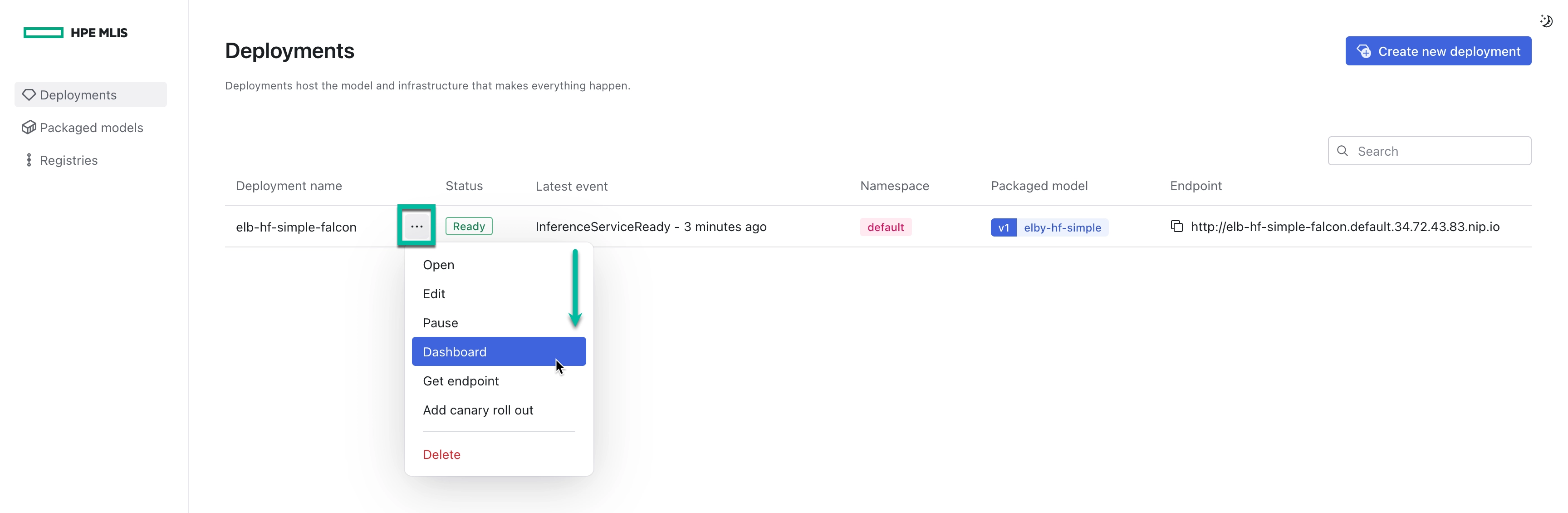

The rollout process begins immediately. You can monitor and analyze traffic between the two models in your deployment’s Grafana dashboard.

Via the CLI

- Sign in to HPE Machine Learning Inferencing Software.

  ```bash
  aioli user login <YOUR_USERNAME>
  ```

- Update a deployment with the following command:

  ```bash
  aioli deployment update <DEPLOYMENT_NAME> \
    --model <PACKAGED_MODEL_NAME> \
    --canary-percentage <CANARY_PERCENTAGE>
  ```

- Wait for your deployment to reach the `Ready` state. You can check its status with:

  ```bash
  aioli deployment show <DEPLOYMENT_NAME>
  ```

  Example output:

  ```yaml
  arguments: []
  autoScaling:
    maxReplicas: 1
    metric: rps
    minReplicas: 1
    target: 0
  canaryTrafficPercent: 5
  environment: {}
  goalStatus: Ready
  id: 857ac422-ccf3-42a9-8d69-5af4cdc859dc
  model: elby-hf-simple
  modifiedAt: '2024-05-20T18:56:41.384852Z'
  name: elb-hf-simple-falcon
  namespace: default
  secondaryState:
    endpoint: http://prev-elby-hf-simple-falcon-predictor.default.34.72.43.83.nip.io
    nativeAppName: elby-hf-simple-falcon-predictor-00001
    status: Ready
    trafficPercentage: 95
  security:
    authenticationRequired: false
  state:
    endpoint: http://elby-hf-simple-falcon.default.34.72.43.83.nip.io
    nativeAppName: elby-hf-simple-falcon-predictor-00002
    status: Ready
    trafficPercentage: 5
  status: Ready
  ```

  In this example, the new version (`state`, revision `00002`) receives 5% of traffic. The prior version (`secondaryState`, revision `00001`) receives the remaining 95%.
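You can avoid re-running `aioli deployment show` by hand by polling it until the deployment reports `Ready`. The following is a minimal sketch, not a documented feature. `wait_for_ready` is a hypothetical helper. It assumes the CLI prints YAML in which the deployment's own `status:` field is an unindented line, while the `state` and `secondaryState` entries are indented beneath their keys. The status command is passed as arguments, so you can exercise the helper without a cluster.

```bash
# Hypothetical helper: poll a status command until the deployment is Ready.
# Usage: wait_for_ready MAX_ATTEMPTS INTERVAL_SECONDS COMMAND [ARGS...]
# Assumption: COMMAND prints YAML like `aioli deployment show`, where the
# top-level status is the unindented line "status: Ready".
wait_for_ready() {
  max_attempts=$1
  interval=$2
  shift 2
  attempt=1
  while :; do
    # -x matches whole lines only, so "goalStatus: Ready" and the indented
    # per-version "status:" lines cannot cause a false positive.
    if "$@" | grep -qx 'status: Ready'; then
      echo "Ready after $attempt attempt(s)"
      return 0
    fi
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "not Ready after $max_attempts attempt(s)" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$interval"
  done
}
```

For example, `wait_for_ready 30 10 aioli deployment show <DEPLOYMENT_NAME>` would check up to 30 times, 10 seconds apart. It returns a non-zero status if the deployment never reports `Ready`, so a script can stop before moving on.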

Via the API

- Sign in to HPE Machine Learning Inferencing Software.

  ```bash
  curl -X 'POST' \
    '<YOUR_EXT_CLUSTER_IP>/api/v1/login' \
    -H 'accept: application/json' \
    -H 'Content-Type: application/json' \
    -d '{
      "username": "<YOUR_USERNAME>",
      "password": "<YOUR_PASSWORD>"
    }'
  ```

- Obtain the Bearer token from the response.

- Get a list of deployments to find the deployment ID.

  ```bash
  curl -X 'GET' \
    '<YOUR_EXT_CLUSTER_IP>/api/v1/deployments' \
    -H 'accept: application/json' \
    -H 'Authorization: Bearer <YOUR_ACCESS_TOKEN>'
  ```

- Get the deployment ID from the response.

- Use the following cURL command to submit a canary rollout PUT request:

  ```bash
  curl -X 'PUT' \
    '<YOUR_EXT_CLUSTER_IP>/api/v1/deployments/<DEPLOYMENT_ID>' \
    -H 'accept: application/json' \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer <YOUR_ACCESS_TOKEN>' \
    -d '{
      "model": "<PACKAGED_MODEL_NAME>",
      "canaryPercentage": <CANARY_PERCENTAGE>
    }'
  ```
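If you script the API call, building the request body in one place avoids malformed JSON such as an out-of-range or zero-padded percentage. The sketch below is hypothetical. `canary_payload` is not part of the product, and it reuses the `model` and `canaryPercentage` field names from the PUT request above. It assumes the packaged model name contains no characters that need JSON escaping, such as double quotes or backslashes.

```bash
# Hypothetical helper: build the JSON body for the canary rollout PUT request.
# Assumption: the model name needs no JSON escaping (no quotes or backslashes).
canary_payload() {
  model=$1
  pct=$2
  # Reject empty, non-numeric, and zero-padded values (JSON forbids leading zeros).
  case $pct in
    ''|*[!0-9]*|0?*)
      echo "error: canary percentage must be a whole number without leading zeros" >&2
      return 1
      ;;
  esac
  if [ "$pct" -gt 100 ]; then
    echo "error: canary percentage must be between 0 and 100" >&2
    return 1
  fi
  printf '{"model": "%s", "canaryPercentage": %s}\n' "$model" "$pct"
}
```

You could then pass `-d "$(canary_payload '<PACKAGED_MODEL_NAME>' 5)"` to the PUT command instead of the literal JSON body.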